Replacing my Synology DS1515+

Nine years ago, I migrated all my local house storage from a massive Dell with Debian and software RAID, onto a tiny little Synology NAS. Well, not exactly tiny, but probably 1/3 of the volume of the Dell. It serves as a file server, Time Machine target, and destination for various rsync and other low-level backup tasks from the rest of the network. At other times, it’s run a Plex server, the Channels DVR, and…I honestly don’t remember what else I’ve experimented with here. It’s a pretty capable little box.

Oh, as you can see in the title, it’s a DS1515+.

Then on Christmas Eve, I started getting warnings:

eldamar ran into a problem and was shut down improperly. This could be caused by power failure or other reasons and may result in severe data loss. Therefore, we highly recommend using an SNMP or USB UPS to protect your device and data if you don't already have one installed.

(Eldamar is the hostname. Most of my systems are Middle-Earth themed. Because you gotta have a theme, and I like Tolkien.)

Anyway, I kind of brushed it off – I checked the admin interface, things looked good, and besides, it was the holidays, so I was a little distracted. I got a similar message on the 26th, then again on January 7. At this point I was starting to wonder, but I was also neck-deep in other things. So again, I let it mostly slide (I mean, it was up and running fine, so…okay?)

Finally, a week ago (the 22nd), it happened twice in 24 hours. And I finally had some time to look into it.

Troubleshooting

The first thing I did was to see what Synology had to say about the situation. The NAS was already on a UPS, and though I don’t have any logging, I was 99.99% sure the UPS never shut down (the house hadn’t lost any power, and the rest of my network is on the same circuit, so it’d have to be a complete, and silent, UPS failure). Which means there’s a software problem. Synology’s help pages suggested I try a memory scan – which I did. Which took a few hours. Came up fine.

They suggested that I should turn off the “Automatically restart after a power failure” switch, which could help distinguish between a failure in the power supply and something software related. So I did that, and let the device continue scrubbing the drives overnight.

The next morning, I came downstairs, and it was off. Okay, so that means it’s almost certainly a power supply thing. I try to turn it back on. Nothing. More digging commences. And I find three interesting hardware-related issues with the unit.

- There’s a transistor which sometimes fails. I believe it basically latches the momentary low-voltage “power on” signal that comes from pushing the power button, and if it fails, the power supply never gets the “turn on for REAL and stay on” message. (Remember when power switches actually switched power? Good times. OTOH, I guess this lets things like Wake On LAN exist, so…progress?)

- There’s a known issue with the CPU in this unit: the Intel Atom C2000. Apparently, the clock in the chip (basically, the timing chain that all the parts of the chip dance along to) can gradually degrade, to the point where it just fails. There’s a fix for this: apply a 100 ohm resistor across two specific pins. Well, “fix” is generous. This just slows down the process. If you’ve got a unit that’s suddenly showing symptoms of this bug (randomly crashing), adding the resistor can bring it back to reliable service, at least for a while. (Keep this in mind, it’ll come up again later.)

- The power supply itself could be well and truly dead.

So, let’s open the box up. First, I try the trick suggested for the transistor - bridge two of the pins together, and see if it powers up again. Doesn’t work. Okay, maybe that lends credence to the power supply being totally borked. But let’s keep looking….

Oh. The resistor fix is already in place. So that won’t be a quick fix. It also means that I’ve definitely got the bad chip, and it’s had nine years to degrade. Maybe the power supply conclusion isn’t as rock solid as I thought.

At this point, I’d found that I could get a new power supply for like $70, which wasn’t too bad. Certainly better than $700 for a whole new unit. But if the CPU is on its last legs (and at this point, it pretty much has to be), there’s not much point in throwing an additional $70 at it. Is there anything else I can try? Turns out, there is. If you bridge two wires on the power supply cable, it forces the power supply to fully activate. (They call this the “paper clip” trick, because you can fold a paper clip into a U shape and just shove it into the connector, bridging the correct two pins).

I try this. And the power button starts blinking with that friendly blue light… Blinking constantly. Nothing else. Pushing it doesn’t do anything, pulling the power cord out and back in again doesn’t change anything. A few online posts tell me that the rapid blinking means there’s a logic board issue…which brings me back to the CPU.

So, yeah. I think the CPU has finally crapped out. Time to buy a new unit.

Enter The DS1522+

Turns out, there’s basically a drop-in replacement - the 1522+.

Here’s where I mention that I love the Synology naming scheme. Well, parts of it, anyway. There’s DS and XS and J and other nomenclature that doesn’t really mean anything to me. I know the features I want are in the DS line, so that’s all I look for. The leading 1 is for…I don’t know. Probably something important. The 5 means 5 bays, and the trailing 15 (or 22) is the year the model was introduced. So DS1515+ is a 5 bay unit introduced in 2015, and the 1522+ is the same thing, first introduced in 2022. It’s now 2025, so this isn’t brand-spanking-new, but it’s not 10 years old, either, and I doubt they’ll update it anytime soon. So I’m good buying the 1522. (The + means something too, but again, I can’t remember exactly what. Really, this digression is just about how easy it is to see size (bay count) and design age (year)).
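For the curious, here is a minimal Python sketch of that decoding. It follows the reading described in this post (last two digits are the year, the digit just before them is the bay count), which may not match Synology's official definition of the scheme. The function name `decode_model` and the returned field names are made up for illustration, and it has only been checked against the two models discussed here.

```python
import re

# Letters, then leading digits, then a two-digit year, then an optional "+".
_MODEL_RE = re.compile(r"([A-Z]+)(\d+)(\d{2})(\+?)")


def decode_model(name: str) -> dict:
    """Decode a model name using this post's reading of the scheme (an assumption)."""
    match = _MODEL_RE.fullmatch(name.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized model name: {name!r}")
    line, leading, year, plus = match.groups()
    return {
        "line": line,                # e.g. "DS"
        "bays": int(leading[-1]),    # assumption: digit just before the year
        "year": 2000 + int(year),    # assumption: models from 2000 onward
        "plus": plus == "+",
    }
```

With that, `decode_model("DS1515+")` and `decode_model("DS1522+")` both report 5 bays, with years 2015 and 2022 respectively.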

Anyway. I ordered the 1522+, and after some unexplained Amazon delivery days (should I be concerned that it spent 36 hours in a facility an hour from the NSA?), it arrived midday yesterday. I opened it up, pulled out all the parts, and compared it to the old unit. It’s nearly identical – lights & buttons have moved, and the drive carriers themselves have changed a little, and the power supply is now an external power brick, but otherwise…it’s as close to a drop-in replacement as one can ask for.

DS1515+ sitting on top of the DS1522+

I’m a little annoyed that the carriers don’t work in the new box, but I guess they added support for 2.5" drives, and moving the drives is a tool-free experience anyway (unsnap a couple rails, move the drives, snap them back into place). So I get over my annoyance, and get the drives moved pretty quickly. I should note that I labeled all the drives as I removed them (from “1 - left” to “5 - right”) because I suspected it would be important to put them back in the right order.

Then I plugged in the ethernet cable, and the power, and hit the power button. Things went all flash-flashy, then the drive & status lights started blinking all at once. Kinda angry looking, but in a friendly-angry sort of way? So I waited.

Eventually, I decided it must be waiting for me, and I tried to connect through the web browser. [I’ll note here that this would surely have been more obvious to me if I’d read any documentation. But you know I wouldn’t actually do that, right?] Anyway, no connection through the browser. Doesn’t respond to ping. Oh, it’s probably pulling a different IP from DHCP – but I vaguely remembered there was a better way to find it, and I finally RTFMd.

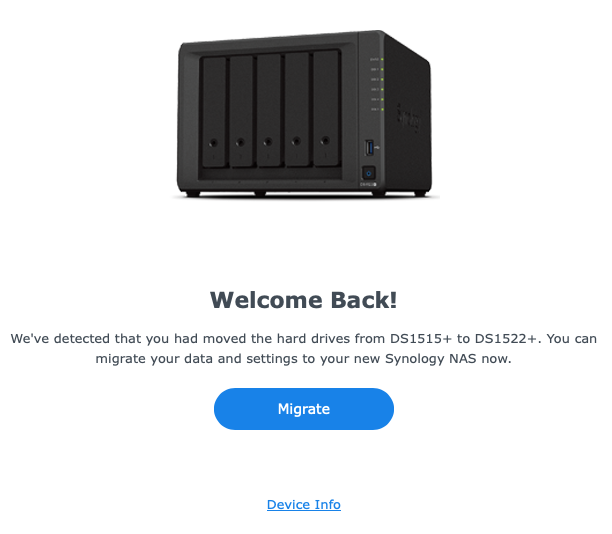

Turns out, I had to go to synologynas.local:5000 (for Macs – for other devices, it’s a different name). And boom, there’s a “Welcome Back!” screen!
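If you'd rather script the "is it answering yet?" check than keep reloading a browser tab, something like the following Python sketch would do. The hostname and port come from the paragraph above; the helper name `is_reachable` and the 2-second timeout are arbitrary choices for illustration, and this only tests that a TCP connection can be opened, not that the web interface is actually ready.

```python
import socket


def is_reachable(host: str, port: int = 5000, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers name-resolution failures, refused connections, and timeouts.
        return False


# Example (not run here): is_reachable("synologynas.local")
```

Name resolution for a `.local` hostname depends on mDNS working on your machine, so a `False` here could mean the name didn't resolve rather than that the NAS is down.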

Waiting patiently....

The “Device Info” link gives me important information, like IP and MAC address, so I can update my DHCP server. For now, though, I just hit the big Migrate button and wait. It tells me it’ll keep all my data, asks if I want to keep the current configuration or start from scratch (I’ll keep the config), and then downloads the newest operating system and begins the migration. A big countdown timer shows up with 10 minutes on the clock, and I wait. Meanwhile, I fixed the DHCP entry, and as it was nearing completion, I tried to connect to the server in a different window – there’s a login screen! It must be done already.

Nonetheless, I let the countdown finish, then it pops up a “Something went wrong” error message, with “please click button to reconnect.” Which it doesn’t. Maybe the app in the web page is still looking at the old IP address, so I go back to the other window and log in, and all is well. I kinda yanked the rug out from under it, so I won’t hold that one against them. So far, this is as seamless as you could want. Oh, it beeped once – I had no idea if that was good or bad at the time, but looking back, that must’ve been when it finished and automatically rebooted.

So, including unpacking, cabling, and physical disk transfers, the whole process probably took under 20 minutes. Nice.

Fine Tuning

Now, I’m in the admin interface, and everything is looking pretty good. It’s running a data scrub again, which I expected. I found a few things which were broken. For example, I have a 2-drive Synology as a local backup (called mandos - get it? halls of the dead? I’m clever.). Looks like that sync broke late last month, too… Some investigation eventually tells me that the sync software updated, but the node.js package was out of date. I fix that, rejigger some configs on both units to nudge them back into compliance, and data flows.

There were a couple other things like that, mostly due not to the last unit failing but to, basically, technical debt and neglect. I also hard-code the IP address (I have no idea why I left that on DHCP), and generally…I think it’s all good? Or at least Good Enough. CloudSync to Backblaze is working (Remember the 3-2-1 rule: 3 copies, two of them on different local media, and 1 offsite). Other services seem to be good. I make a mental note to check a few more details over the next couple days (like, I can delete that Channels DVR package), and call it a day.
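The 3-2-1 rule is simple enough to write down as a check. This Python sketch uses the common reading of the rule (at least 3 copies, on at least 2 distinct media, with at least 1 offsite). The `BackupCopy` type, the function name, and the media labels are all made up for illustration; the three copies listed are just a rough picture of the setup described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    medium: str    # free-form label, e.g. "nas-eldamar" or "cloud"
    offsite: bool


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """Check for >= 3 copies, >= 2 distinct media, and >= 1 offsite copy."""
    distinct_media = {copy.medium for copy in copies}
    has_offsite = any(copy.offsite for copy in copies)
    return len(copies) >= 3 and len(distinct_media) >= 2 and has_offsite


# Hypothetical labels for the setup in this post.
plan = [
    BackupCopy("eldamar", medium="nas-eldamar", offsite=False),
    BackupCopy("mandos", medium="nas-mandos", offsite=False),
    BackupCopy("Backblaze", medium="cloud", offsite=True),
]
```

Whether two NAS units in the same house really count as "different media" is a judgment call; the check only compares the labels you give it.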

Conclusion

Overall, this migration was dead simple. It certainly helps that it’s basically the same model, just 7 years newer. Obviously, since I was using 5 drives before, I needed a unit with at least 5 bays. I think I should’ve been able to get any new unit with more bays and it would’ve worked just fine, but the next size up was nearly double the price, and for my basement NAS, I really don’t need that level of power.

I have heard that some of the Synology models will only work with Synology-branded drives. In fact, even for this model, they provide a compatibility list of Synology & select 3rd party drives. Quick research didn’t look like it would be a problem to move my existing drives over – and if it were a problem, I’d have bigger problems anyway, since I couldn’t get the old unit to boot. But that’s definitely something I’ll have to keep in mind when I next upgrade the drives, or if I want to get another NAS for something else.

But in general, I can definitely say that I was really impressed with how simple and painless this transition was. Others may have different experiences, but DS1515+ to DS1522+ using 8TB Seagate Ironsomethingorothers worked just flawlessly.

(Okay, in the interests of Science, they’re Seagate IronWolf NAS ST8000VN0022-2EL112 (4 drives) and ST8000VN004-2M2101 (1 drive).)