Put away the tin-foil: The Apple unlock case is complicated enough

Apple and the FBI are fighting. The {twitter, blog, media}-‘verses have exploded. And FUD, confusion, and conspiracy theories have been given free rein.

Rather than going into deep technical detail, or pontificating over the moral, legal, and ethical issues at hand, I thought it might be useful to discuss some of the more persistent misinformation and misunderstandings I’ve seen over the last few days.

Background

On February 16, 2016, Apple posted A Message to Our Customers, a public response to a recent court order, in which the FBI demands that Apple take steps to help them break the passcode on an iPhone 5C used by one of the terrorists in the San Bernardino shooting last year.

All this week, Twitter, and blogs, and tech news sites, and mainstream media have discussed this situation. As it’s a very complex issue, with many subtle aspects and inscrutable technical details, these stories and comments are all over the map. The legal and moral questions raised by this case are significant, and not something I’m really qualified to discuss.

However, I am comfortable ranting at a technical level. I’ve already described this exact problem in A (not so) quick primer on iOS encryption (and presented a short talk at NoVA Hackers). One of the best posts particular to the current case can be found at the Trail of Bits Blog, which addresses many of the items I discuss here in more technical detail.

Apple (and many others) have been calling this a “back door,” which may or may not be an overstatement. It’s certainly a step down a slippery slope, whether you consider this a solution to a single-phone case, or a general solution to any future cases brought by any government on the planet. But, again, I’m not interested in discussing that.

Emotions are high, and knowledge is scarce, which leads to all kinds of crazy ideas, opinions, or general assumptions being repeated all over the internet. I’m hoping that I can dispel some of these, or at least reduce the confusion, or at a bare minimum, help us to be more aware of what we’re all thinking, so that we can step back and consider the issues rationally.

Technical Overview

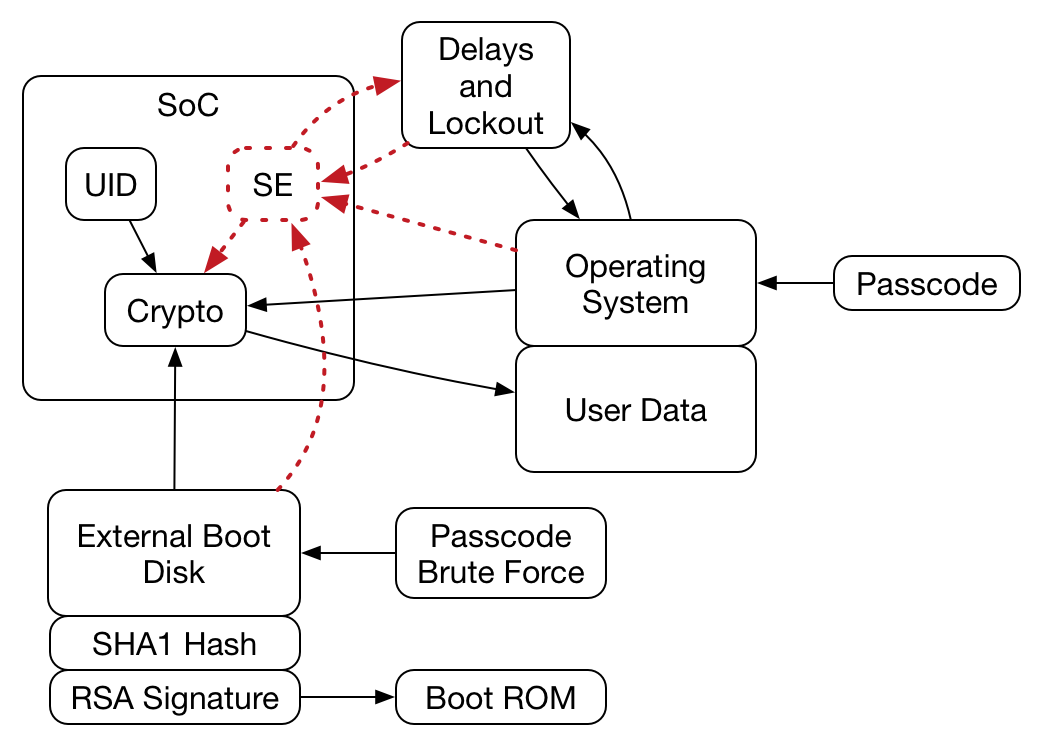

First, a very high-level description of how one unlocks an iPhone. This is a very complicated system, and the blog posts (and slide deck) that I linked to above provide much better detail than what I’ll go into here. But hopefully this diagram and a short bullet list can give enough detail that the rest of the post will make at least some sense.

Simplified passcode logical flow

To unlock an iPhone (or iPad or iPod Touch):

- The user enters a passcode on the operating-system provided lock screen

- The OS sends that passcode to a cryptographic engine on the device’s System on a Chip (the “SoC”, which contains the CPU and other important bits of hardware; this is what Apple brands “A6” and so forth)

- The crypto engine combines the passcode with a Unique Identifier (UID), a random number embedded directly in the SoC and unique to each individual device

- The result of this combination is a uniquely-derived key that can begin to unlock any encrypted user data on the device

- If the user enters the wrong passcode, the operating system adds one to the “bad guess” counter, and if there have been enough bad guesses, locks the user out for some period of time (up to an hour between guesses)

- If the user hits a maximum threshold of bad guesses (by default, 10 tries), it either locks the device totally (requiring iTunes to unlock) or wipes it clean

In later devices (iPhone 5S, iPad Air, and later), the management of the bad guess counter and timeout delays is handled by the Secure Enclave (SE), another processor on the SoC with its own software.
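The unlock flow above can be sketched in a few lines of Python. This is only a toy model under loud assumptions: `derive_key`, `ToyLockScreen`, and the choice of PBKDF2-HMAC-SHA256 are all hypothetical stand-ins (the real derivation runs inside the SoC's crypto engine, and the UID is never visible to software), and the escalating time delays are left out.

```python
import hashlib
import hmac

MAX_BAD_GUESSES = 10  # default threshold described in the list above


def derive_key(passcode: str, uid: bytes) -> bytes:
    """Combine a passcode with a device UID into a 256-bit key.

    PBKDF2-HMAC-SHA256 is an assumption used for illustration only; it is
    not the function the hardware actually uses.
    """
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), uid, 1000, dklen=32)


class ToyLockScreen:
    """Toy model of the passcode check and the bad-guess counter."""

    def __init__(self, uid: bytes, passcode: str) -> None:
        self._uid = uid
        # Simplification: a real device does not store the expected key;
        # it tries to use the derived key to unwrap other keys.
        self._expected = derive_key(passcode, uid)
        self.bad_guesses = 0
        self.wiped = False

    def try_unlock(self, guess: str) -> bool:
        if self.wiped:
            return False  # nothing left to unlock
        candidate = derive_key(guess, self._uid)
        if hmac.compare_digest(candidate, self._expected):
            self.bad_guesses = 0
            return True
        self.bad_guesses += 1
        if self.bad_guesses >= MAX_BAD_GUESSES:
            self.wiped = True  # or "locked, requires iTunes" in the other mode
        return False
```

The point of the sketch is that the same passcode on two devices with different UIDs yields unrelated keys, and that the counter lives in ordinary software that sits in front of the key derivation.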

So, if you want to unlock an iPhone, but don’t know the passcode, how can you unlock it?

- Guess the right passcode in fewer than 10 tries

- Brute force the output of the key derivation crypto function (a 256-bit AES key)

- Physically dismantle the SoC so you can read the UID by directly inspecting the silicon gates with an ultra-high-powered microscope. Then, you can brute force the passcode.

- Modify the operating system to ignore bad guesses.

The last is the easiest, but Apple didn’t make it that easy. To modify the operating system on the device, you first have to defeat the default full-disk-encryption, which is also based on the UID (and beyond the scope of this post), so we’re back to a microscope attack.

Or you could boot from an external hard drive. Unfortunately for hackers and law enforcement (but good for iOS users), the iPhone won’t boot from just any external drive. The external image has to be signed by Apple.

This is exactly what the FBI is asking Apple to do (and, incidentally, a boot ROM bug in iPhone 4 and earlier allowed hackers to do this too, which is how we know it’s possible). The basic approach is this:

- Apple creates a minimal boot disk image with a user shell and libraries to interface with the passcode systems

- This image is then signed with Apple’s private key

- The target iPhone is booted from the external image

- A program on the image filesystem runs a passcode brute force attack

- It submits a passcode to the system in the same way the real OS does

- But if the passcode fails, it doesn’t bother keeping count

- When the right passcode is found, it’s displayed to the user

- The user writes down the passcode, reboots the phone from the internal disk, and uses the passcode to unlock the phone
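The brute-force program in the steps above might look roughly like the following sketch. Everything here is hypothetical: `make_toy_device` fakes the on-device check with PBKDF2 (an assumption, not the real derivation), and `brute_force` simply walks every numeric passcode of a given length without keeping any count of failures.

```python
import hashlib
import itertools
from typing import Callable, Optional


def make_toy_device(uid: bytes, passcode: str) -> Callable[[str], bool]:
    """Return a check(guess) function standing in for the on-device test."""

    def derive(pc: str) -> bytes:
        # Stand-in derivation; the real one happens in hardware with the UID.
        return hashlib.pbkdf2_hmac("sha256", pc.encode(), uid, 200, dklen=32)

    expected = derive(passcode)

    def check(guess: str) -> bool:
        return derive(guess) == expected

    return check


def brute_force(check: Callable[[str], bool], digits: int = 4) -> Optional[str]:
    """Try every numeric passcode of the given length, ignoring failures."""
    for combo in itertools.product("0123456789", repeat=digits):
        guess = "".join(combo)
        if check(guess):
            return guess  # this is what gets displayed to the user
    return None  # passcode is not a numeric code of this length


if __name__ == "__main__":
    device = make_toy_device(b"\x42" * 32, "0420")
    print(brute_force(device))
```

Note that the loop still has to call the device's own check for every guess, which is why the attack only works when run on the target phone.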

Beginning with the iPhone 5S, some of the passcode processing functionality moved into the Secure Enclave, so this attack would need to be modified to remove the lockouts from the SE as well.

Note that these methods still require the passcode to be brute-forced. For a 4-digit number, that can happen in as little as 15 minutes, but for a strong passcode, it can take days, months, or even years (or centuries). So even with a signed boot image, this attack is far from a silver bullet.

Confusion!

That’s (basically) the attack that Apple is being asked to perform. Now to address some of the more confusing points and questions circulating this week:

Just crack the passcode on a super fast password cracking machine! That can’t be done, because the key derived from the passcode also depends on the Unique ID (UID) embedded within the SoC. This UID cannot be extracted, either by software or by electronic methods, so the password-based keys can never be generated on an external system. The brute-force attack must take place on the device being targeted. And the device takes about 80 milliseconds per guess.
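To put the 80 milliseconds in perspective, here is a small worked calculation of the worst-case time to try every passcode of a given shape. The function name and the example passcode formats are my own choices for illustration; the only figure taken from above is the per-guess cost.

```python
SECONDS_PER_GUESS = 0.080  # roughly 80 ms per on-device guess


def worst_case_seconds(alphabet_size: int, length: int) -> float:
    """Time to try every passcode of the given length, one guess at a time."""
    return (alphabet_size ** length) * SECONDS_PER_GUESS


# Example passcode formats (chosen for illustration only).
examples = [
    ("4-digit PIN", 10, 4),
    ("6-digit PIN", 10, 6),
    ("6 characters, lowercase letters and digits", 36, 6),
]

for label, alphabet, length in examples:
    seconds = worst_case_seconds(alphabet, length)
    print(f"{label}: {seconds:,.0f} s (about {seconds / 86400:,.1f} days)")
```

A 4-digit PIN works out to about 800 seconds, or roughly 13 minutes. A 6-digit PIN takes about 22 hours, and six characters drawn from lowercase letters and digits take on the order of five and a half years.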

Look at the BUGS, MAN! Yes, iOS has bugs. Sometimes it seems like a whole lot of bugs. Every major version of iOS has been jailbroken. But all these bugs depend on accessing an unlocked device. None of them help with a locked phone.

What about that lockscreen bypass we saw last {week / month / year}? These bypasses seem to pop up with distressing frequency, but they’re nothing more than bugs in (essentially) the “Phone” application. Sometimes they’ll let you see other bits of unencrypted data on a device, but they never bypass the actual passcode. The bulk of the user data remains encrypted, even when these bugs are triggered.

But {some expensive forensics software} can do this! Well, maybe it can, and maybe it can’t. Forensics software is very closely held, and some features are limited to specific devices, and specific operating system versions. One system that got some press last year exploited a bug in which the bad guess counter wasn’t updated fast enough, and so the system could reboot the phone before the guess was registered, allowing for thousands of passcode guesses. (Also, as far as we know, all those bugs have been fixed, so this only works with older versions of iOS.)

If Apple builds this, then Bad Guys (or the FBI, which to some may be the same thing) can use this everywhere! Well, not necessarily. Apple could put a check in the external image that verifies some unique identifier on the phone (a serial number, ECID, IMEI, or something similar). Because this would be hard-coded in a signed boot image, any attempts to change that code to work on a different phone would invalidate the signature, and other phones would refuse to boot. (What is true, though, is that once Apple has built the capability, it would be trivial to re-apply it to any future device, and they could quickly find themselves needing a team to unlock devices for law enforcement from all around the world…but that goes back into the cans of worms I’m not going to get into today).
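The idea of tying a signed image to one phone can be sketched like this. It is a toy under stated assumptions: a keyed HMAC stands in for Apple's real public-key signature (the standard library has no RSA), and `SIGNING_KEY`, `sign_image`, `signature_valid`, and `will_run_on` are hypothetical names, not anything from Apple's boot chain.

```python
import hashlib
import hmac

# Hypothetical secret; a stand-in for the vendor's private signing key.
SIGNING_KEY = b"hypothetical-vendor-signing-key"


def _signed_bytes(ecid: str, payload: bytes) -> bytes:
    # The hard-coded device identifier is part of what gets signed.
    return ecid.encode() + b"\x00" + payload


def sign_image(payload: bytes, target_ecid: str) -> dict:
    """Build an image bound to one device and sign the whole thing."""
    sig = hmac.new(SIGNING_KEY, _signed_bytes(target_ecid, payload),
                   hashlib.sha256).digest()
    return {"ecid": target_ecid, "payload": payload, "sig": sig}


def signature_valid(image: dict) -> bool:
    """What the boot chain checks: has the image changed since signing?"""
    expected = hmac.new(SIGNING_KEY,
                        _signed_bytes(image["ecid"], image["payload"]),
                        hashlib.sha256).digest()
    return hmac.compare_digest(expected, image["sig"])


def will_run_on(image: dict, device_ecid: str) -> bool:
    """The image boots only if it is unmodified AND meant for this device."""
    return signature_valid(image) and image["ecid"] == device_ecid
```

An image signed for one identifier refuses to run on another phone, and editing the embedded identifier to match a different phone breaks the signature, so the boot check fails before the image ever runs.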

NSA. ‘Nuff said. Who knows? (more on that below)

What about the secret key? Isn’t it likely that the Advanced Persistent Threat has it anyway? If the secret key has been compromised, then, yeah, we’re back to the state we were in with iPhone 4 and hacker-generated boot images. But the attacker still needs to brute force the passcode on the target device. And, frankly, if that key has leaked, then Apple has far, far bigger problems on their hands.

Later devices which use the Secure Enclave are safe!! Possibly. Possibly not. I see a few possibilities here:

- The timeout / lockout is written in silicon and can’t be updated or bypassed: No software attacks will get around it, and the phone will always be limited to 10 attempts. End of story.

- The lockouts are implemented in an upgradeable Secure Enclave firmware, but the firmware can’t be updated if the device is locked: Again, this attack fails. But a normal OS update to an unlocked phone can change this at any time, restoring the attack for future use.

- The SE firmware can be updated even on a locked phone, using a trusted boot disk: This is, functionally, no different from today’s attack, it’s just a little more complicated.

Can anyone outside of Apple do this?

So, what about the NSA? Or China? Or zero-day merchants? Surely they have a way to do this, right?

We don’t know.

If there’s a way to do this, it would require one (or more) of the following:

- A compromised Apple secret key to sign a hacked external boot image

- A major cryptographic break in either RSA signatures or SHA1 hashes (allowing a forged signature)

- A new boot ROM bug that allows booting from an unsigned image

- A major cryptographic break in the key derivation function (where the UID and passcode are combined) or in AES itself (to directly decrypt the user data)

- A physical attack on the device processor, to directly view the UID at a microchip circuit level (theoretically possible today, but I don’t know if anyone’s publicly admitted to pulling it off)

- A new bug in the operating system that bypasses the bad guess counter (which would not apply to newer devices), or a similar bug in the Secure Enclave (which would work on newer devices)

- A way to update the SE on a locked device (which we currently presume requires a way to get past the external boot disk signature checks)

All of these are theoretically possible, but none seem terribly likely (other than an OS-level bug on older devices). In fact, the most reasonable (and least disturbing) possibility, to me, is the direct physical attack on the chip. Even that might be preventable, but I Am Not A Chip Designer and could only speculate on how.

Bottom Line

There’s still a lot we don’t know. In fact, I think this list is pretty much what I wrote in 2014:

- It seems that Apple should be able to perform this attack, based on what we used to be able to do with iPhone 4 and earlier

- It’s possible some things have changed and the attack is no longer feasible (only Apple knows for sure)

- We don’t know whether this attack can happen on devices with the Secure Enclave: Can the timeouts be removed with a SE firmware change? Can that happen on a locked device?

Much of this we’ll never know, unless Apple explicitly tells us in a new update to their iOS Security Guide. And even then, we’ll probably only have their word for it, because many of these questions can’t be independently verified without that trusted external boot image.