DLP Considered Harmful - A Rant about Reliable Certificate Pinning

[Note: Yes, I understand the point of DLP. Yes, I’m being unrealistically idealistic. I still think this is wrong, and that we do ourselves a disservice to pretend otherwise.]

The Latest Craziness

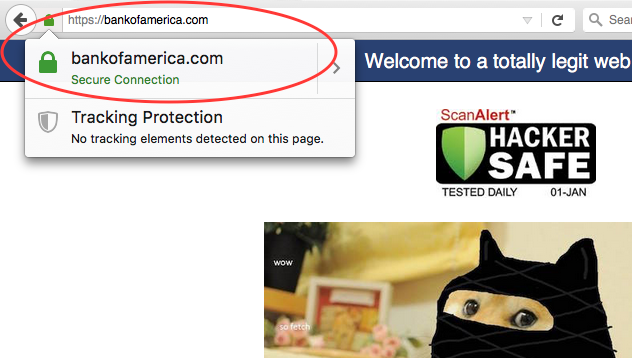

It is happening again. A major computer manufacturer (this time Dell, instead of Lenovo) shipped computers with a trusted root TLS CA certificate installed in the operating system’s trust store. Again, the private key was included with the certificate, so anyone who extracts it can issue certificates for any site that those machines will accept as genuine. Now anyone who wants to perform a man-in-the-middle attack against users of those devices can easily do so.

Any domain, any site (Image by Kenn White (@kennwhite))

But as shocking as that may have been, what comes next may surprise you!

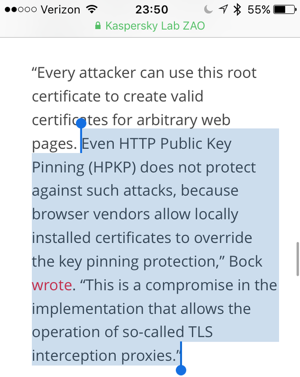

Browsers let local certs override HPKP

Data Loss Prevention and Certificate Pinning

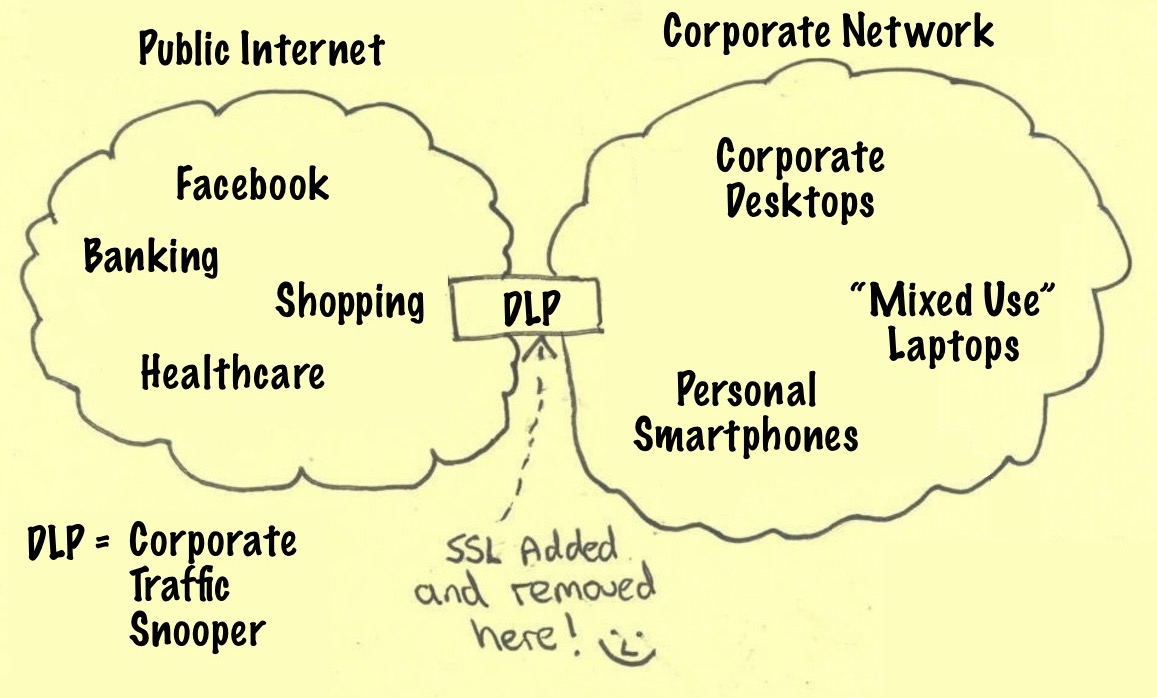

It’s (reasonably) well known that many large enterprises utilize man-in-the-middle proxies to intercept and inspect data, even TLS-encrypted data, leaving their networks. This is justified as part of a “Data Loss Prevention” (DLP) strategy, and excused by “Well, you signed a piece of paper saying you have no privacy on this network, blah blah blah.”

However, I had no idea that browser makers have conspired to allow such systems to break certificate pinning (and apparently I wasn’t the only one surprised by this).

Certificate pinning can go a long way to restoring trust in the (demonstrably broken) TLS public key infrastructure, ensuring that data between an end user and internet-based servers are, in fact, properly protected.
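To make the idea concrete, here is a minimal sketch of what a client-side pinning check can look like, assuming an app that ships with a hard-coded set of SHA-256 fingerprints for its server’s certificate. `PINNED_FINGERPRINTS`, `PinMismatchError` and the function names are hypothetical, and to stay within the Python standard library it pins the whole certificate rather than just the public key (HPKP, described below, pins keys). A production client would also abort before sending any data, rather than checking after the fact.

```python
import hashlib
import socket
import ssl
from typing import Set

# Hypothetical pin set: hex SHA-256 fingerprints of the server certificates
# this client will accept. A real app would ship these inside the build.
PINNED_FINGERPRINTS: Set[str] = {
    "0000000000000000000000000000000000000000000000000000000000000000",
}


class PinMismatchError(Exception):
    """Raised when the server presents a certificate we did not pin."""


def fingerprint(cert_der: bytes) -> str:
    """Hex SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(cert_der).hexdigest()


def cert_matches_pin(cert_der: bytes, pins: Set[str]) -> bool:
    """True only if the presented certificate is one of the pinned ones."""
    return fingerprint(cert_der) in pins


def fetch_leaf_cert(host: str, port: int = 443) -> bytes:
    """Open an ordinary CA-validated TLS connection and return the leaf cert (DER)."""
    context = ssl.create_default_context()  # normal chain + hostname checks
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert(binary_form=True)


def check_pinned_host(host: str, pins: Set[str]) -> None:
    cert_der = fetch_leaf_cert(host)
    # The CA check above succeeds for ANY trusted root, including a proxy's
    # locally installed one; only the pin comparison rejects a substitute cert.
    if not cert_matches_pin(cert_der, pins):
        raise PinMismatchError(f"{host} presented {fingerprint(cert_der)}")
```

With the placeholder pin above, `check_pinned_host("example.com", PINNED_FINGERPRINTS)` raises `PinMismatchError` (assuming the connection itself succeeds). That is the point: passing ordinary CA validation is not enough.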

It’s reasonably easy to implement cert pinning in mobile applications (since the app developer owns both ends of the system – the server and the mobile app), but it’s more difficult to manage in browsers. RFC 7469 defines “HPKP”, or “HTTP Public Key Pinning,” which allows a server to indicate, in a `Public-Key-Pins` response header, which public keys (SHA-256 hashes of a certificate’s SubjectPublicKeyInfo) are to be trusted for future visits to a website.
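As a rough illustration of what a server sends under RFC 7469, the sketch below computes a `pin-sha256` value and assembles a `Public-Key-Pins` header value. It assumes you already have the DER-encoded SubjectPublicKeyInfo bytes (with the `cryptography` package, `cert.public_key().public_bytes(Encoding.DER, PublicFormat.SubjectPublicKeyInfo)` produces them); the function names are mine, not from any library. Most browsers have since dropped HPKP support, so treat this as an illustration of the mechanism rather than deployment advice.

```python
import base64
import hashlib
from typing import List, Optional


def pin_sha256(spki_der: bytes) -> str:
    """RFC 7469 pin: base64 of the SHA-256 digest of the DER-encoded SPKI."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def hpkp_header(pins: List[str], max_age: int,
                include_subdomains: bool = False,
                report_uri: Optional[str] = None) -> str:
    """Build a Public-Key-Pins header value from precomputed pin-sha256 values."""
    if len(pins) < 2:
        # RFC 7469 expects a backup pin that is not in the current chain, and a
        # header without one is not honored, so this sketch refuses to build it.
        raise ValueError("HPKP needs at least one backup pin")
    directives = [f'pin-sha256="{p}"' for p in pins]
    directives.append(f"max-age={max_age}")
    if include_subdomains:
        directives.append("includeSubDomains")
    if report_uri:
        directives.append(f'report-uri="{report_uri}"')
    return "; ".join(directives)
```

The returned string is the value of the `Public-Key-Pins` header; `max_age` is in seconds (5184000 is 60 days).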

Because the browser won’t know anything about the remote site before it’s visited at least once, the protocol relies on “Trust on First Use” (TOFU), unless the pins are bundled with the browser, which Chrome currently does for some sites. This means that if, for example, the first time you visit Facebook on a laptop is from home, the browser would “learn” the site’s pinned keys from that first visit, and should complain if it’s ever presented with a certificate that doesn’t match those pins when visiting the site in the future, like if a hacker’s attacking your connection at Starbucks.
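The trust-on-first-use logic can be sketched as a small pin store. This is a toy model, not any browser’s code: `TofuPinStore`, `visit`, and the string pins are hypothetical, and real HPKP also expires pins after `max-age` and refreshes them on later visits, which is not modeled here.

```python
from typing import Dict, FrozenSet, Iterable, Optional


class TofuPinStore:
    """Toy trust-on-first-use pin store; an illustration, not browser code."""

    def __init__(self, preloaded: Optional[Dict[str, Iterable[str]]] = None) -> None:
        # Preloaded pins stand in for the lists some browsers bundle, so those
        # hosts are protected even on the very first visit.
        self._pins: Dict[str, FrozenSet[str]] = {
            host: frozenset(pins) for host, pins in (preloaded or {}).items()
        }

    def visit(self, host: str, chain_pins: Iterable[str],
              header_pins: Iterable[str] = ()) -> str:
        """Return 'ok', 'mismatch', 'learned', or 'unpinned' for one visit.

        chain_pins: pins (SPKI hashes) of the keys in the chain served this time.
        header_pins: pins the site advertised in its HPKP header, if any.
        """
        chain = frozenset(chain_pins)
        known = self._pins.get(host)
        if known is not None:
            # Later visits: some key in the served chain must match a stored pin.
            return "ok" if known & chain else "mismatch"
        header = frozenset(header_pins)
        if header & chain:
            # First visit: nothing to compare against, so trust what we see
            # (only if the advertised pins cover the chain actually served).
            self._pins[host] = header
            return "learned"
        return "unpinned"
```

In the home-then-Starbucks scenario, the first visit returns `"learned"`, and a later visit through an attacker’s certificate returns `"mismatch"`. The weak spot is also visible: if the very first visit is the intercepted one, the store learns the attacker’s keys.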

But some browsers, by design, ignore all that when presented with a trusted root certificate, installed locally:

> Chrome does not perform pin validation when the certificate chain chains up to a private trust anchor. A key result of this policy is that private trust anchors can be used to proxy (or MITM) connections, even to pinned sites. “Data loss prevention” appliances, firewalls, content filters, and malware can use this feature to defeat the protections of key pinning.
>
> We deem this acceptable because the proxy or MITM can only be effective if the client machine has already been configured to trust the proxy’s issuing certificate — that is, the client is already under the control of the person who controls the proxy (e.g. the enterprise’s IT administrator). If the client does not trust the private trust anchor, the proxy’s attempt to mediate the connection will fail as it should.
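Reduced to its essentials, the policy quoted above is a single early return in front of the pin check. The following is a toy model of that rule, not Chromium’s actual code; `Chain`, `root_is_builtin`, and `pin_check` are hypothetical names.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Chain:
    spki_pins: FrozenSet[str]  # pins (SPKI hashes) of every key in the chain
    root_is_builtin: bool      # True if the root ships in the public trust store


def pin_check(pins: FrozenSet[str], chain: Chain,
              bypass_private_anchors: bool = True) -> bool:
    """Return True if the connection is allowed (toy model, hypothetical names)."""
    if bypass_private_anchors and not chain.root_is_builtin:
        # The quoted policy: a locally installed (private) trust anchor skips
        # pin validation entirely, so a DLP proxy's forged chain is accepted.
        return True
    return bool(pins & chain.spki_pins)
```

A forged chain that ends in a public root fails the pin check, but the same forgery ending in a locally installed corporate root is waved through. That early return is the whole problem.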

What this means is that, even when a remote site specifies that a browser should only connect when it sees the correct, site-issued certificate, the browser will ignore those instructions when a corporate DLP proxy is in the mix. This allows the employer’s security team to inspect outbound traffic and (they hope) prevent proprietary information from leaving the company’s network. It also means they can see sensitive, personal, non-corporate information that should have been protected by encryption.

This Is Broken

I, personally, think that’s overstepping the line, and here’s why:

[ranty opinion section begins]

The employer’s DLP MITM inspecting proxy may be an untrusted third party to the connection. Sure, it’s trusted by the browser; that’s the point. But is it trusted by the user, and by the service to which the user is connecting?

Suppose, for example, a user is checking their bank account from work (never mind why, or whether that’s even a good idea). Does the user really want to allow their employer to see their bank password? Because they just did. Does the bank really want its customer to do that? Who bears the liability if the proxy is hacked and banking passwords are extracted? The end user who shouldn’t have been banking at work? The bank? The corporation that sniffed the traffic?

A corporation has some right to inspect its own traffic, to know what’s going on. But unrelated third parties also have a right to expect their customers’ data to be secure, end-to-end, without exception. If this means that some sites become unavailable within some corporate environments, so be it. But users need to be able to know that their data is secure, and as it stands, that kind of assurance seems impossible to provide.

Users aren’t even given a warning that this is happening. They’re told it could happen, when they sign an Acceptable Use Policy, but they aren’t given a real-time warning when it happens. They deserve to be told “Hey, someone is able to access your bank password and account information, RIGHT NOW. It’s probably just your employer, but if you don’t trust them with this information, don’t enter your password, close the browser, and wait until you get to a computer and network that you personally trust before you try this again.”
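The warning I’m asking for could be modeled as a third outcome between silently allowing and hard-failing. This is a hypothetical policy sketch: no browser is claimed to work this way, and `Verdict` and `decide` are illustrative names.

```python
from enum import Enum
from typing import FrozenSet


class Verdict(Enum):
    ALLOW = "allow"
    WARN = "warn"    # proceed only after telling the user the traffic is readable
    BLOCK = "block"


def decide(pins: FrozenSet[str], chain_pins: FrozenSet[str],
           root_is_builtin: bool) -> Verdict:
    """Hypothetical policy: surface private-anchor interception instead of hiding it."""
    if not pins or pins & chain_pins:
        # Unpinned site, or the served chain matches a pin: nothing to report.
        return Verdict.ALLOW
    if not root_is_builtin:
        # Pin mismatch through a locally installed root: most likely a DLP
        # proxy. Instead of silently skipping validation, warn in real time.
        return Verdict.WARN
    # Pin mismatch through a publicly trusted root: treat it as an attack.
    return Verdict.BLOCK
```

The enterprise proxy keeps working, but the user sees the interception at the moment it matters instead of in a policy document they signed months ago.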

SSL Added And Removed Here

[end ranty section]

It’s Bigger Than Just The Enterprise

Unfortunately, it’s not just large corporations which are doing this kind of snooping. Just a few days ago, I was at an all-night Cub Scout “lock-in” event for my eldest son, at a local volunteer fire department. They had free Wi-Fi. Great! I’m gonna be here all night, might as well get some work done in the corner. Imagine my surprise when I got certificate trust warnings from host “168.168.0.0”. The volunteer fire department was trying to MITM my web traffic.

Fortunately, they didn’t include any “click here to install a certificate and accept our Terms of Use” kind of captive portal, so the interception failed. If they had, I certainly wouldn’t have used the connection (and as it was, I immediately dropped it and tethered to my phone instead). But how many people would blindly accept such a certificate? How many “normal people” are putting their banking, healthcare, email, and social media identities and information at risk through such a system, every day? This sort of interception has been seen at schools, on airplanes, and in many other places where “free” Wi-Fi is offered.

In my job, I frequently recommend certificate pinning as a vital mechanism to ensure that traffic is kept secure against any eavesdropper. Now, suddenly, I’m faced with the very real possibility that there’s no point, because we’re undermining our own progress in the name of DLP. Pinning can make TLS at least moderately trustworthy again, but if browsers can so easily subvert it, then we’re right back where we started.

Finally, though I’m not usually one to encourage tinfoil-hat conspiracy theories, consider this. With all the talk about companies taking the maximum possible steps to protect their users’ data, with iPhone and Android encryption, and with the government complaining about “going dark,” a DLP pinning bypass provides an easy way for the government to get at data that users might otherwise think is protected. Could the FBI, or NSA, or [insert foreign intelligence or police force] already be requesting logs from corporate MITM DLP proxies? How well is that data being protected? Who else is getting caught up in the dragnet?

Cognitive Dissonance FTW

On the one hand, we as an industry are:

- Advocating strongly for the maximum possible privacy and security protections for users' data

- Developing and promulgating solutions such as certificate pinning and HPKP to ensure connections are secure and trusted

- Loudly complaining when government entities push for a “back door” into such data, either at rest or in transit

But at the same time, we:

- Tell enterprises that “hackers are stealing your data”

- Tell them they need to inspect everything leaving their network so they can catch these hackers

- Tell them that, to do this, they should simply install this “back door” on their network

- Tell them it’s okay to ignore everything we said about privacy and encryption…just add a disclaimer when people get on the network

I think this is a lousy situation to be in. Who do we fight for? What matters? And how do we justify ourselves when we issue such contradictory guidance? How can we claim any moral high ground while fighting against government encryption back doors, when we recommend and build them for our own customers? How can our advice be trusted if we can’t even figure this out?

I hope and believe that in the long run, users and services will push back against this. (And, as I said at the beginning, I know that I’m probably wrong.) I suspect it will begin with the services – with banks, healthcare providers, and other online services wanting HPKP they can trust, corporate DLP policies be damned. Who knows, maybe this will be the next pressure point Apple applies.

When that happens, I just hope we can offer a solution to the data loss problem that doesn’t expect a corporation to become the NSA in order to survive.